Hi Folks! In this post I am going to demonstrate how the main ORMs: EF Core, NHibernate (in the .NET Framework unfortunately, I couldn't make the application run with the .NET Core version of it) and pure ADO.NET for good measure, deal and perform with a huge dataset, a TSV text file filled with more than 41M rows (17M rows for the Titles, 13M rows for the Professions and 11M rows for the main table). So, let's start with the TSV text file (where does it come from, in what format it's in, etc.).

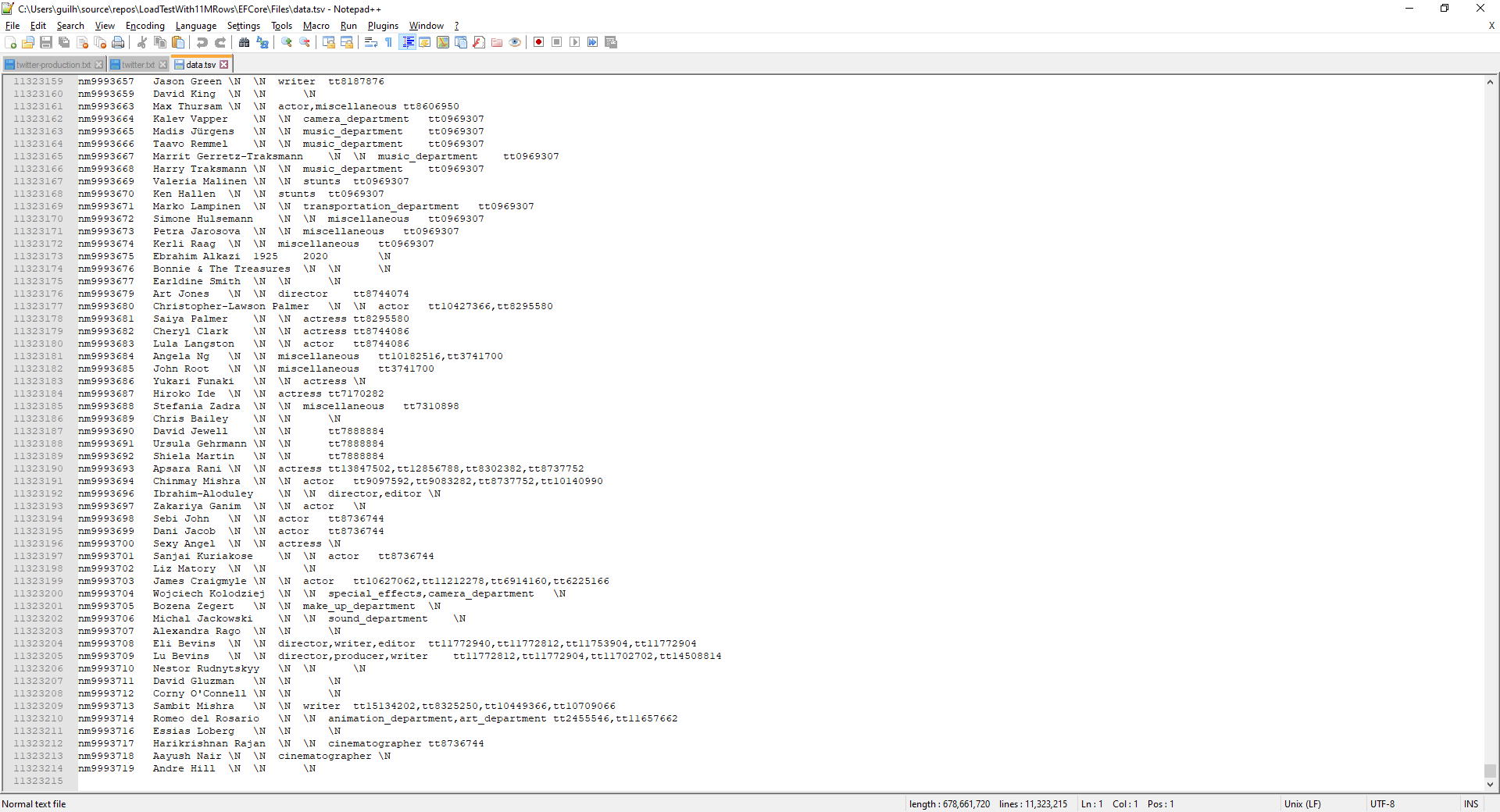

In search for a huge database for the task, I came across a TSV text file from IMDb, that can be downloaded here, just look for the name.basics.tsv.gz link (that is the file we are dealing with). The layout of the information can be found here. So, basically the name.basics.tsv.gz file contains the respective fields delimited by TAB (\t):

- nconst (string) - alphanumeric unique identifier of the name/person;

- primaryName (string)– name by which the person is most often credited;

- birthYear – in YYYY format;

- deathYear – in YYYY format if applicable, else '\N';

- primaryProfession (array of strings)– the top-3 professions of the person, separated by commas;

- knownForTitles (array of tconsts) – titles the person is known for, separated by commas.

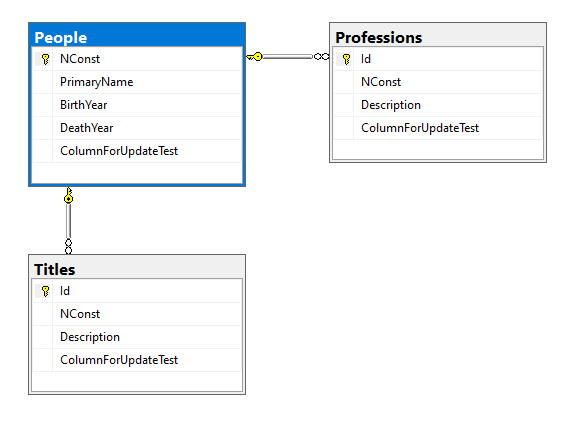

The server used to insert and process all the information is the SQL Server 2019 (I believe you can import the LoadTest.bacpac file in older versions though). I created 3 tables to hold the information: the main table People, a secondary table Professions and a secondary table Titles. There's a relationship from People to Professions, same thing here for the Titles table. You can see their layout here:

And to keep everything tight, I also created 3 databases with the same tables in my local environment: LoadTest_EFCore, LoadTest_NHibernate and LoadTest_AdoNet. If you want to, you can run the 3 tests with the same database.

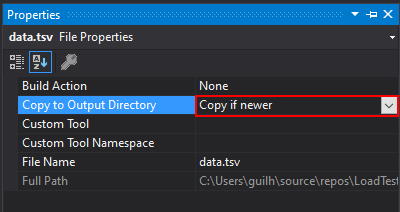

You'll have to create a directory called Files in each of the solutions and paste the data.tsv file in there (don't change the name of the file, it's hard-coded). And don't forget to set Copy if newer in the file properties.

Now, let's talk about the load and performance test themselves. They are really simple actually... the first one imports all 41M rows into the tables, the second one retrieves all rows from all tables and updates a column called ColumnForUpdateTest and the last one, also retrieves all rows from all the tables, but deletes them from the database.

Please, clone the repository here. You'll find 3 console projects with the data.tsv file in it and a database bacpac file at the root of the solution folder.

The machine used for all tests is an AMD Threadripper 1950X with 32GB of DDR4 RAM and a 512GB ADATA SX6000NP M.2 NVMe.

Load Test (Importing 41M rows)

Let's start by taking a look at the code that will import all the rows, separated by EFCore, NHibernate and AdoNet. The EFCore one is really simple, like I said. It reads the TSV text file, iterates through the lines, creates the objects and flushes the data into the database for each row. I tried delaying calling the db.SaveChanges() method (putting it outside the loop), but the memory required to run the test was humongous.

Don't run the tests from VS (it's gonna take forever). Build the projects and run direct from the bin directory.

#region Loading all rows

//first: load all rows from the text file into the database table

var filepath = Path.GetFullPath("Files\\data.tsv");

Console.WriteLine("Loading all rows from data.tsv file: started {0}", DateTime.Now);

using (var stream = new StreamReader(filepath))

{

stream.ReadLine();

while (stream.EndOfStream == false)

{

using (var db = new LoadTestContext())

{

var line = stream.ReadLine();

if (string.IsNullOrWhiteSpace(line) == false)

{

var fields = line.Split('\t');

var person = new Person()

{

NConst = fields[0],

PrimaryName = fields[1],

BirthYear = fields[2] == String.Empty || fields[2] == @"\N" ? null : short.Parse(fields[2]),

DeathYear = fields[3] == String.Empty || fields[3] == @"\N" ? null : short.Parse(fields[3]),

};

if (string.IsNullOrWhiteSpace(fields[4]) == false && fields[4] != @"\N")

{

var professions = fields[4].Split(',');

foreach (var profession in professions)

{

person.PrimaryProfession.Add(new Profession()

{

Description = profession,

});

}

}

if (string.IsNullOrWhiteSpace(fields[5]) == false && fields[5] != @"\N")

{

var titles = fields[5].Split(',');

foreach (var title in titles)

{

person.KnownForTitles.Add(new Title()

{

Description = title,

});

}

}

db.People.Add(person);

db.SaveChanges();

}

}

}

}

Console.WriteLine("Loading all rows from data.tsv file: finished {0}", DateTime.Now);

#endregion

The piece of code took 4 hours and 12 minutes average to run. Not bad, not bad at all. Let's see how NHibernate does it.

And the NHibernate code does the same:

#region Loading all rows

//first: load all rows from the text file into the database table

var filepath = Path.GetFullPath("Files\\data.tsv");

Console.WriteLine("Loading all rows from data.tsv file: started {0}", DateTime.Now);

using (var stream = new StreamReader(filepath))

{

stream.ReadLine();

while (stream.EndOfStream == false)

{

var line = stream.ReadLine();

if (string.IsNullOrWhiteSpace(line) == false)

{

var fields = line.Split('\t');

var person = new Person()

{

NConst = fields[0],

PrimaryName = fields[1],

BirthYear = fields[2] == String.Empty || fields[2] == @"\N" ? (short?)null : short.Parse(fields[2]),

DeathYear = fields[3] == String.Empty || fields[3] == @"\N" ? (short?)null : short.Parse(fields[3]),

};

if (string.IsNullOrWhiteSpace(fields[4]) == false && fields[4] != @"\N")

{

var professions = fields[4].Split(',');

foreach (var profession in professions)

{

person.PrimaryProfession.Add(new Profession()

{

NConst = person.NConst,

Description = profession,

});

}

}

if (string.IsNullOrWhiteSpace(fields[5]) == false && fields[5] != @"\N")

{

var titles = fields[5].Split(',');

foreach (var title in titles)

{

person.KnownForTitles.Add(new Title()

{

NConst = person.NConst,

Description = title,

});

}

}

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

session.Save(person);

session.Flush();

session.Close();

}

foreach (var profession in person.PrimaryProfession)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

session.Save(profession);

session.Flush();

session.Close();

}

}

foreach (var title in person.KnownForTitles)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

session.Save(title);

session.Flush();

session.Close();

}

}

}

}

}

Console.WriteLine("Loading all rows from data.tsv file: finished {0}", DateTime.Now);

goto readline;

#endregion

NHibernate took approximately 4 hours and 30 minutes for the task. Now, let's see the benchmark for the pure ADO.NET solution.

The code basically does the same thing, so let's look at the methods that insert the data into the database:

public static void InsertPerson(SqlConnection conn, Person p)

{

if (conn != null)

{

using (var cmd = conn.CreateCommand())

{

cmd.CommandText = "INSERT INTO [dbo].People (NConst,PrimaryName,BirthYear,DeathYear) VALUES (@NConst,@PrimaryName,@BirthYear,@DeathYear);";

cmd.Parameters.Add(new SqlParameter { ParameterName = "@NConst", Value = p.NConst });

cmd.Parameters.Add(new SqlParameter { ParameterName = "@PrimaryName", Value = p.PrimaryName });

cmd.Parameters.Add(new SqlParameter { ParameterName = "@BirthYear", Value = p.BirthYear ?? (object)DBNull.Value });

cmd.Parameters.Add(new SqlParameter { ParameterName = "@DeathYear", Value = p.DeathYear ?? (object)DBNull.Value });

cmd.ExecuteNonQuery();

}

foreach (var profession in p.PrimaryProfession) InsertProfession(conn, profession);

foreach (var title in p.KnownForTitles) InsertTitle(conn, title);

}

}

public static int InsertProfession(SqlConnection conn, Profession p)

{

if (conn != null)

{

using (var cmd = conn.CreateCommand())

{

cmd.CommandText = "INSERT INTO [dbo].Professions (NConst,Description) VALUES (@NConst,@Description); SELECT IDENT_CURRENT('Professions');";

cmd.Parameters.Add(new SqlParameter { ParameterName = "@NConst", Value = p.NConst });

cmd.Parameters.Add(new SqlParameter { ParameterName = "@Description", Value = p.Description });

p.Id = Convert.ToInt32(cmd.ExecuteScalar());

return p.Id;

}

}

return 0;

}

public static int InsertTitle(SqlConnection conn, Title t)

{

if (conn != null)

{

using (var cmd = conn.CreateCommand())

{

cmd.CommandText = "INSERT INTO [dbo].Titles (NConst,Description) VALUES (@NConst,@Description); SELECT IDENT_CURRENT('Titles');";

cmd.Parameters.Add(new SqlParameter { ParameterName = "@NConst", Value = t.NConst });

cmd.Parameters.Add(new SqlParameter { ParameterName = "@Description", Value = t.Description });

t.Id = Convert.ToInt32(cmd.ExecuteScalar());

return t.Id;

}

}

return 0;

}

The pure ADO.NET imported the rows in average 2 hours and 11 minutes, almost half compared to the ORMs.

Update Test (Modifying 41M rows)

Again the code is separated by EFCore, NHibernate and AdoNet. For updating, I tried loading all objects in memory first, but an exception would pop up, so I decided to do it in batches of 1M rows:

#region Updating all rows

update:

//second: updates all rows

Console.WriteLine("Updating all rows from the People, Professions and Titles tables: started {0}", DateTime.Now);

{

var count = new LoadTestContext().People.Count();

var index = 0;

while (index < count)

{

var people = new LoadTestContext().People

.Include(x => x.PrimaryProfession)

.Include(x => x.KnownForTitles)

.Skip(index)

.Take(1000000)

.ToList();

foreach (var person in people)

{

using (var db = new LoadTestContext())

{

person.ColumnForUpdateTest = new string('0', 50);

db.Attach(person);

db.Entry(person).Property(x => x.ColumnForUpdateTest).IsModified = true;

db.SaveChanges();

foreach (var title in person.KnownForTitles)

{

title.ColumnForUpdateTest = new string('1', 50);

db.Attach(title);

db.Entry(title).Property(x => x.ColumnForUpdateTest).IsModified = true;

db.SaveChanges();

}

foreach (var profession in person.PrimaryProfession)

{

profession.ColumnForUpdateTest = new string('2', 50);

db.Attach(profession);

db.Entry(profession).Property(x => x.ColumnForUpdateTest).IsModified = true;

db.SaveChanges();

}

}

}

index += 1000000;

}

}

Console.WriteLine("Updating all rows from the People, Professions and Titles tables: finished {0}", DateTime.Now);

#endregion

EFCore took an average of 5 hours and 14 minutes to update all 41M rows. I think it's a win, because EFCore was the only method that loaded the PrimaryProfession and the KnowForTitles objects fast enough. Now, let's have a look at how NHibernate deals with this situation. Again, NHibernate loads a batch of 1M rows for each time:

update:

//second: updates all rows

Console.WriteLine("Updating all rows from the People table: started {0}", DateTime.Now);

{

var count = LoadTestContext.GetSessionFactory().OpenSession().Query<Person>().Count();

var index = 0;

while (index < count)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

var people = session

.Query<Person>()

.Skip(index)

.Take(1000000)

.ToList();

foreach (var person in people)

{

person.ColumnForUpdateTest = new string('0', 50);

session.SaveOrUpdate(person);

session.Flush();

}

session.Close();

}

index += 1000000;

}

count = LoadTestContext.GetSessionFactory().OpenSession().Query<Profession>().Count();

index = 0;

while (index < count)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

var professions = session

.Query<Profession>()

.Skip(index)

.Take(1000000)

.ToList();

foreach (var profession in professions)

{

profession.ColumnForUpdateTest = new string('0', 50);

session.SaveOrUpdate(profession);

session.Flush();

}

index += 1000000;

}

}

count = LoadTestContext.GetSessionFactory().OpenSession().Query<Title>().Count();

index = 0;

while (index < count)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

var titles = session

.Query<Title>()

.Skip(index)

.Take(1000000)

.ToList();

foreach (var title in titles)

{

title.ColumnForUpdateTest = new string('0', 50);

session.SaveOrUpdate(title);

session.Flush();

}

index += 1000000;

}

}

}

NHibernate took an average of 3 hours and 18 minutes to update all 41M rows. The only difference between EFCore and NHibernate is that the Professions and Titles are loaded and updated separately (loading PrimaryProfession and KnownForTitles objects inside the Person class proved to be too slow). Now, let's go the pure ADO.NET solution.

#region Updating all rows

update:

//second: updates all rows

Console.WriteLine("Updating all rows from the People, Professions and Titles tables: started {0}", DateTime.Now);

{

using (var conn = new SqlConnection(LoadTestContext.ConnectionString))

{

conn.Open();

var count = LoadTestContext.CountPeople(conn);

var index = 0;

while (index < count)

{

var people = LoadTestContext.SelectPeople(conn, index, 1000000);

foreach (var person in people)

{

person.ColumnForUpdateTest = new string('0', 50);

LoadTestContext.UpdatePerson(conn, person);

}

index += 1000000;

}

count = LoadTestContext.CountProfessions(conn);

index = 0;

while (index < count)

{

var professions = LoadTestContext.SelectProfessions(conn, index, 1000000);

foreach (var profession in professions)

{

profession.ColumnForUpdateTest = new string('0', 50);

LoadTestContext.UpdateProfession(conn, profession);

}

index += 1000000;

}

count = LoadTestContext.CountTitles(conn);

index = 0;

while (index < count)

{

var titles = LoadTestContext.SelectTitles(conn, index, 1000000);

foreach (var title in titles)

{

title.ColumnForUpdateTest = new string('0', 50);

LoadTestContext.UpdateTitle(conn, title);

}

index += 1000000;

}

conn.Close();

}

}

Console.WriteLine("Updating all rows from the People, Professions and Titles tables: finished {0}", DateTime.Now);

#endregion

ADO.NET took an average of 1 hour and 54 minutes. The same NHibernate approach was taken here, the Professions and Titles were updated separately (loading the objects seem ed to be too slow). Now, let's have a look at the deletion procedure!

Delete Test (Excluding 41M rows)

EFCore delete test didn't go so well, even though the code is pretty straightforward, loading up by batches of a 1M rows for each time. The performance was too slow, the whole deletion process would take more than 47 days! Unfortunately, I didn't finish the test, only made my estimates after running it for two hours.

#region Deleting all rows

delete:

Console.WriteLine("Deleting all rows from the People, Professions and Titles tables: started {0}", DateTime.Now);

{

var count = new LoadTestContext().People.Count();

var index = 0;

while (index < count)

{

var people = new LoadTestContext().People

.Include(x => x.PrimaryProfession)

.Include(x => x.KnownForTitles)

.Take(1000000)

.ToList();

foreach (var person in people)

{

using (var db = new LoadTestContext())

{

db.RemoveRange(person.KnownForTitles);

db.RemoveRange(person.PrimaryProfession);

db.Remove(person);

db.SaveChanges();

}

}

index += 1000000;

}

}

Console.WriteLine("Deleting all rows from the People, Professions and Titles tables: finished {0}", DateTime.Now);

#endregion

Here's the code for the NHibernate, almost the same as the Update test.

#region Deleting all rows

delete:

Console.WriteLine("Deleting all rows from the People table: started {0}", DateTime.Now);

{

var count = LoadTestContext.GetSessionFactory().OpenStatelessSession().Query<Profession>().Count();

var index = 0;

while (index < count)

{

var professions = LoadTestContext.GetSessionFactory()

.OpenStatelessSession()

.Query<Profession>()

.Skip(0)

.Take(1000000)

.ToList();

foreach (var profession in professions)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

session.Delete(profession);

session.Flush();

session.Close();

}

}

index += 1000000;

}

count = LoadTestContext.GetSessionFactory().OpenStatelessSession().Query<Title>().Count();

index = 0;

while (index < count)

{

var titles = LoadTestContext.GetSessionFactory()

.OpenStatelessSession()

.Query<Title>()

.Skip(0)

.Take(1000000)

.ToList();

foreach (var title in titles)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

session.Delete(title);

session.Flush();

session.Close();

}

}

index += 1000000;

}

count = LoadTestContext.GetSessionFactory().OpenStatelessSession().Query<Person>().Count();

index = 0;

while (index < count)

{

var people = LoadTestContext.GetSessionFactory()

.OpenStatelessSession()

.Query<Person>()

.Skip(0)

.Take(500000)

.ToList();

foreach (var person in people)

{

using (var session = LoadTestContext.GetSessionFactory().OpenSession())

{

session.Delete(person);

session.Flush();

session.Close();

}

}

index += 500000;

}

}

Console.WriteLine("Deleting all rows from the People table: finished {0}", DateTime.Now);

#endregion

NHibernate did well in this test, averaging 6 hours. I only had to reduce the size of the batch for the People deletion (from 1M to 0.5M rows), because an OutOfMemory exception was being thrown. Now, let's see how ADO.NET handles the deletion test.

#region Deleting all rows

delete:

Console.WriteLine("Deleting all rows from the People, Professions and Titles tables: started {0}", DateTime.Now);

{

using (var conn = new SqlConnection(LoadTestContext.ConnectionString))

{

conn.Open();

var count = LoadTestContext.CountProfessions(conn);

var index = 0;

while (index < count)

{

var professions = LoadTestContext.SelectProfessions(conn, 0, 1000000);

foreach (var profession in professions)

{

LoadTestContext.DeleteProfession(conn, profession);

}

index += 1000000;

}

count = LoadTestContext.CountTitles(conn);

index = 0;

while (index < count)

{

var titles = LoadTestContext.SelectTitles(conn, 0, 1000000);

foreach (var title in titles)

{

LoadTestContext.DeleteTitle(conn, title);

}

index += 1000000;

}

count = LoadTestContext.CountPeople(conn);

index = 0;

while (index < count)

{

var people = LoadTestContext.SelectPeople(conn, 0, 1000000, false);

foreach (var person in people)

{

LoadTestContext.DeletePerson(conn, person);

}

index += 1000000;

}

conn.Close();

}

}

Console.WriteLine("Deleting all rows from the People, Professions and Titles tables: finished {0}", DateTime.Now);

#endregion

Again, ADO.NET did the best in the deletion test, averaging 2 hours and 7 minutes.

Conclusion

Nothing beats pure ADO.NET, if you don't care having to code all the CRUD operations. It's a shame that EF didn't finish the deletion test, but there's an alternative: you can always do logic deletions instead and then creating a database routine to actually delete the rows (which is much faster). What I like about EF is that when you include objects of a class when selecting the entities, the process is very fast (thing that NHibernate and ADO.NET weren't prepared to do). NHibernate did well in the tests, I would recommend both of the ORMs.